The Role of AI in Pharma Marketing

Signal loss, privacy constraints, and shrinking access to healthcare professionals (HCPs) have made yesterday’s playbooks unreliable. AI helps by turning fragmented data into next-best-action decisions across HCP and patient journeys. Teams that build a compliant data foundation, automate content operations, and measure causal lift can expect faster time-to-insight, higher message relevance, and more durable growth in total prescriptions (TRx).

Why Pharma Engagement Needs Reinvention

- Reduced Access To HCPs: Shorter visits and digital fatigue shrink windows for influence.

- Siloed Point Solutions: Web, email, CLM, EHR/POC, and field data rarely connect, limiting closed-loop learning.

- Privacy & Consent: HIPAA, GDPR/CPRA, and evolving platform policies curtail legacy retargeting.

- Slow Learning Cycles: Quarterly marketing mix modeling (MMM) isn’t enough; brands need rapid, experiment-driven optimization.

AI In Pharma Marketing: A Practical Overview

Artificial intelligence in pharma isn’t a single tool — it’s a stack of data, models, and workflows that turns scattered signals into compliant next-best actions across HCP and patient journeys. Done right, AI shortens learning cycles from quarters to weeks, raises message relevance at the moment of need, and ties every activation to causal, audit-ready evidence.

The Building Blocks

Results come from how the parts work together. A unified data foundation, uplift-focused analytics, claims-bound generation, orchestration, and closed-loop proof form the backbone of AI-driven engagement.

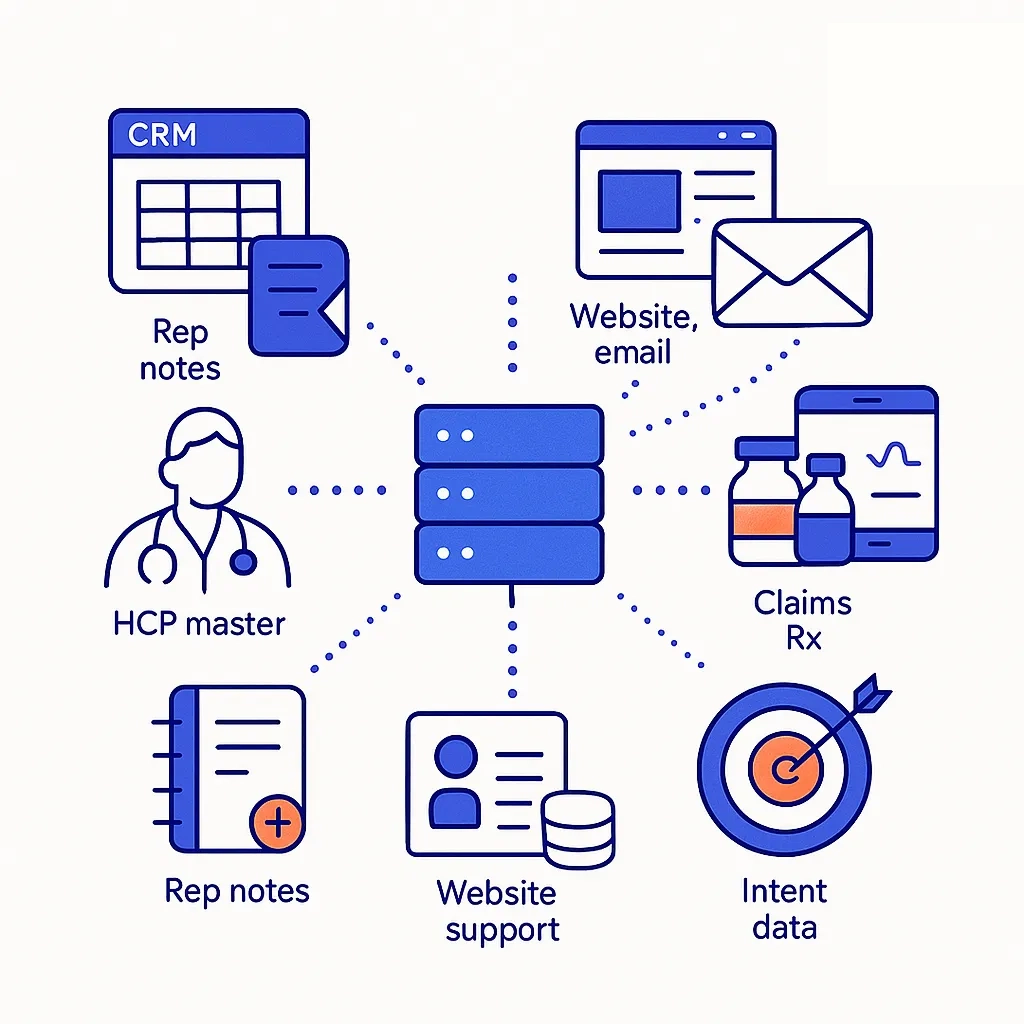

Data Foundation: Unified first-party (CRM/CLM, rep notes, websites, email, support hubs) and approved third-party data (HCP reference, claims/Rx, specialty pharmacy, EHR/POC media, intent). Identity is deterministic for HCPs (NPI) and tokenized for patients, with consent lineage captured at the source.

Predictive & Causal Analytics: Models estimate propensity to initiate/switch therapy, adherence risk, and likely decile movement. Uplift and heterogeneous-treatment-effect models focus on incremental impact rather than raw response.

Generative AI For Content Ops: LLMs modularize approved claims, create compliant variants, and summarize unstructured inputs (rep notes, med-info inquiries) when paired with retrieval from an “approved facts” library.

Decisioning & Orchestration: Policies select the next-best-action/channel/message (NBA/NBC/NBM) based on predicted lift, consent, and label constraints — then push those actions into CLM, EHR/POC, email/SMS, or field tools.

Closed-Loop Measurement: Geo/NPI holdouts, staggered rollouts, triangulation of MMM with multi-touch attribution (MTA), and model monitoring (drift, decay, fairness) create a continuous learn-and-prove cycle.

With the architecture defined, let’s ground it in live use cases: how field teams, EHR/POC media, omnichannel HCP programs, and patient CRM work differently when AI is in the loop.

Where AI Is Being Used Today

Here we translate architecture into outcomes: dynamic call lists for reps, context-aware prompts in care settings, fatigue-aware HCP cadence, and consent-based patient nudges tied to real access events.

Field Force Enablement: Reps receive dynamic call lists and objection-matched leave-behinds generated from approved claims. Follow-ups trigger automatically after specific engagement signals (e.g., EHR prompt acceptance, content depth).

EHR/POC Contextualization: AI filters audiences and timing, surfacing within-label reminders when care context implies relevance (e.g., prior authorization needs, payer changes), while enforcing frequency caps and audience exclusions.

Omnichannel HCP Marketing: Email, CLM, and professional social cadence adapt to predicted fatigue and content affinity at the NPI level, so each HCP receives fewer, more relevant touches.

Patient Initiation & Adherence: Consent-based CRM uses refill windows, claim rejections, and affordability flags to time SMS/email nudges and hub outreach — reducing abandonment and time-to-start without exposing PHI to external systems.

Insight Mining: LLMs summarize thousands of rep notes and medical inquiries to reveal recurring objections, unmet needs, and content gaps that shape the next wave of assets approved through medical, legal, and regulatory (MLR) review.

Access & Affordability: When a payer denial is detected, AI sequences checklists, prior-auth guidance, and copay messaging tailored to the specific plan/profile.

Each use case depends on trustworthy inputs. Next, we’ll detail how to source, govern, and package data so models and marketers can reuse it safely at speed.

Data Foundation: Build Once, Use Everywhere

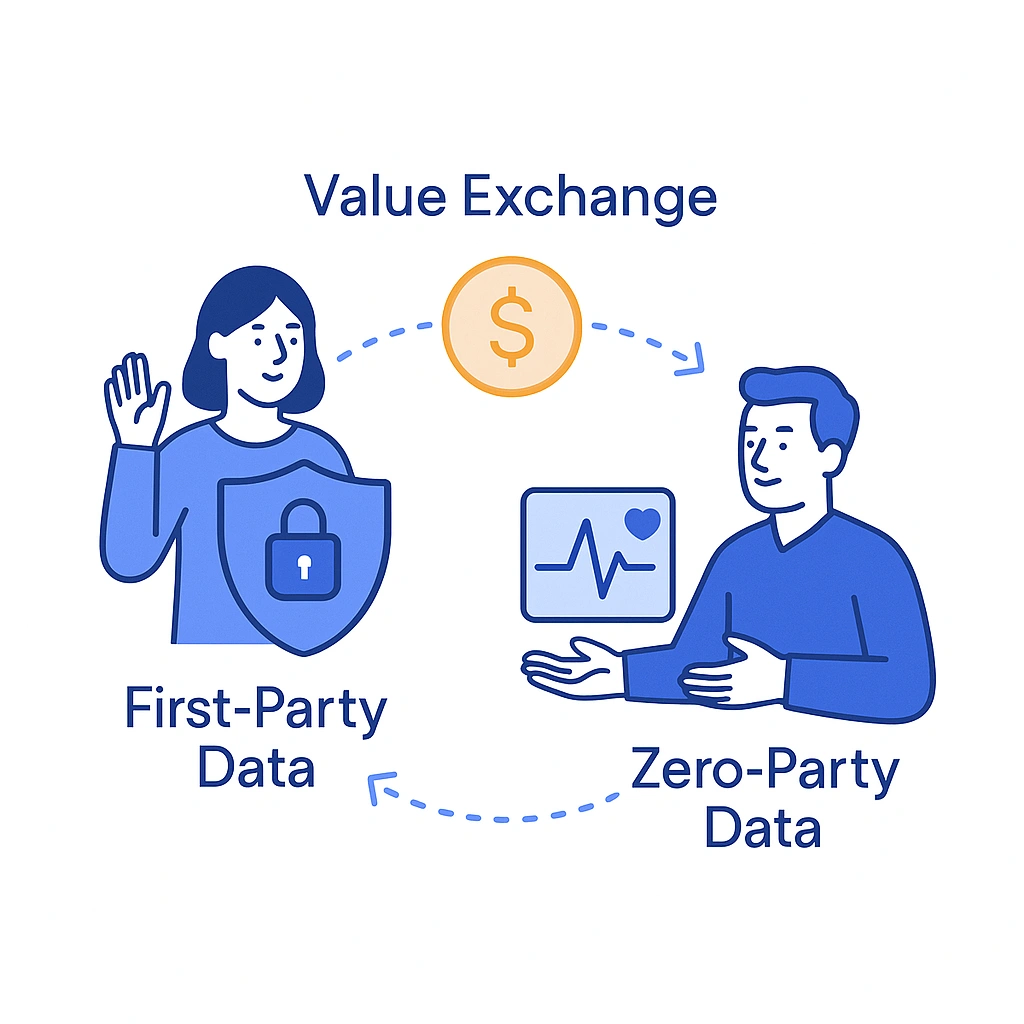

Durable AI starts with durable data — first-party signals augmented by approved third-party sources, stitched deterministically for HCPs and tokenized for patients, with consent lineage preserved.

Source And Unify

- First-Party: CRM/CLM, rep calls, websites, email, call centers, patient support hubs.

- Third-Party: HCP master data, claims/Rx, specialty pharmacy feeds, POC/EHR media, intent data.

- Identity: Deterministic HCP resolution (NPI), tokenized patient graphs, consent lineage.
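
To make the identity split concrete, here is a minimal sketch of deterministic HCP keys and tokenized patient keys. It assumes a keyed hash (HMAC-SHA256) as the tokenization method, which the approach above does not prescribe; the names `SECRET_KEY`, `tokenize_patient`, and `hcp_key` are hypothetical.

```python
import hashlib
import hmac

# Hypothetical secret for illustration; in practice it would come from a
# managed key store, never from source code.
SECRET_KEY = b"replace-with-managed-secret"


def tokenize_patient(raw_id: str, key: bytes = SECRET_KEY) -> str:
    """Return a stable token for a patient identifier using a keyed hash."""
    normalized = raw_id.strip().lower()
    return hmac.new(key, normalized.encode("utf-8"), hashlib.sha256).hexdigest()


def hcp_key(npi: str) -> str:
    """HCPs are matched deterministically on the 10-digit NPI itself."""
    digits = npi.strip()
    if len(digits) != 10 or not digits.isdigit():
        raise ValueError(f"not a 10-digit NPI: {npi!r}")
    return digits
```

The same patient identifier always maps to the same token under one key, so records can be joined across feeds without carrying the raw identifier downstream.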

Govern And Protect

- Consent & Preferences: Capture opt-in context, revocation timestamps, and channel permissions.

- PHI/PII Controls: Minimize, de-identify, and activate via clean rooms when needed.

- Quality: Freshness SLAs, anomaly detection, golden-record management.
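
As an illustration of consent capture with revocation timestamps and channel permissions, the sketch below checks whether a contact is permitted at a given moment. The `ConsentRecord` fields and the `may_contact` helper are assumptions made for this example, not a reference schema.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Optional


@dataclass(frozen=True)
class ConsentRecord:
    subject_token: str            # tokenized patient ID or NPI
    channel: str                  # e.g. "sms", "email"
    opted_in_at: datetime
    opt_in_context: str           # where the consent was captured
    revoked_at: Optional[datetime] = None


def may_contact(records, subject_token, channel, at):
    """True only if an opt-in for this channel is active at time `at`."""
    for r in records:
        if r.subject_token != subject_token or r.channel != channel:
            continue
        if r.opted_in_at <= at and (r.revoked_at is None or at < r.revoked_at):
            return True
    return False
```

Keeping the revocation timestamp on the record, rather than deleting the row, is what lets later audits reconstruct whether a past send was permitted at the time.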

Make It Usable

- Feature Store: Reusable model features with documentation.

- Model Registry: Versioning, drift and performance monitoring.

- Composable Audiences: Legal/label guardrails embedded upstream.
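
One way the drift monitoring attached to a model registry could work is a population stability index (PSI) comparing a feature’s training-time distribution with its current one. This is a sketch under assumptions: PSI is a swapped-in technique not named above, and the bin count and the small floor that avoids taking the log of zero are arbitrary choices.

```python
import math


def population_stability_index(expected, actual, bins=10):
    """PSI between a baseline sample and a current sample of one feature."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if all values are equal

    def shares(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values
        # A small floor keeps empty bins from producing log(0).
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))
```

A PSI of zero means the two samples fall into the bins identically, and larger values indicate a larger shift. The alert threshold is a team decision and would be documented alongside the model version.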

Sample Data Map

This table clarifies which signals serve which jobs — from near-real-time site/email behaviors for cadence control to weekly claims for adherence and access interventions.

| Source | Example Signals | Key/ID | Latency | Sensitivity | Primary Use Cases |
| --- | --- | --- | --- | --- | --- |
| CRM/CLM | Call notes, detail depth, objections | NPI | Daily | Low | NBA for field, NBM for follow-ups |
| Website & Email | Content affinity, recency, frequency | Email/NPI | Near-real-time | Low | Segmentation, cadence control |
| Claims/Rx | NBRx/TRx, abandonment, persistency | Tokenized patient | Weekly | High | Adherence risk, access interventions |
| Specialty Pharmacy | Rejections, prior auth status | Tokenized patient | Daily | High | Copay and affordability triggers |
| EHR/POC Media | Message acceptance, context cues | NPI/Facility | Near-real-time | Low | Context-aware HCP messaging |
| Intent/Reference Data | Condition interest, panel size, affiliations | NPI | Weekly | Low | Targeting, decile shift prediction |

Analytics That Change Behavior (Not Just Dashboards)

Segmentation and targeting become specific when you model journey states, expected time-to-treatment, and incremental lift — not just raw response.

Segmentation And Targeting

- HCP Micro-Segments: Specialty × payer mix × access barriers × content affinity.

- Patient Journey States: Likely diagnosis, initiation window, adherence risk category.

Predictive And Causal

- Propensity/Time-To-Treatment: Forecast initiation and timing to prioritize interventions.

- Uplift/Heterogeneous Treatment Effects: Match channel and cadence to individuals predicted to gain the most.

- Triangulated Measurement: MMM for budget, MTA for path insight, and geo/NPI holdouts for causal proof.
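
The difference between raw response and incremental lift is easiest to see with numbers. The sketch below estimates uplift per segment as the treated conversion rate minus the control conversion rate, assuming the rows come from a randomized test. The function name and row layout are illustrative, and a production model would estimate heterogeneous effects with more than a segment-level difference.

```python
from collections import defaultdict


def uplift_by_segment(rows):
    """rows: iterable of (segment, treated, converted) from a randomized test."""
    counts = defaultdict(lambda: {"t_n": 0, "t_y": 0, "c_n": 0, "c_y": 0})
    for segment, treated, converted in rows:
        prefix = "t" if treated else "c"
        counts[segment][prefix + "_n"] += 1
        counts[segment][prefix + "_y"] += int(converted)

    result = {}
    for segment, c in counts.items():
        if c["t_n"] == 0 or c["c_n"] == 0:
            continue  # lift cannot be estimated without both arms
        result[segment] = c["t_y"] / c["t_n"] - c["c_y"] / c["c_n"]
    return result
```

A segment that converts at 40% when treated and 38% when untreated has a high raw response but only about two points of lift. A segment moving from 10% to 20% responds less often overall yet gains far more from the intervention, so it is the better place to spend the next touch.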

LLM-Driven Insight Mining

- Summarize Field Notes: Extract recurring objections and clinical themes without PHI exposure.

- Cluster Open Text: Surface unmet needs and content gaps to inform MLR-approved assets.

Automation That Scales Compliance

Event triggers, policy controls, claims-bound generation, and MLR tooling connect predictions to execution, with audit trails designed to support 21 CFR Part 11 requirements for electronic records and signatures.

Orchestration

From payer denials and EHR prompt views to site-behavior thresholds, orchestration selects channel, message, and frequency within guardrails and pushes them to delivery systems.

- Event Triggers: Payer denial, sample request, EHR prompt viewed, or site behavior crosses threshold.

- Policy Controls: Frequency caps, channel rotation, cooling-off periods, and audience exclusions.
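
The trigger-plus-policy logic above can be sketched as a single selection function: apply the frequency cap, drop channels without consent or still in a cooling-off period, then pick the candidate with the highest predicted lift. All names and default values here (`Candidate`, `next_best_action`, three touches per seven days, a two-day cooling-off) are hypothetical placeholders.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class Candidate:
    channel: str
    message_id: str
    predicted_lift: float


def next_best_action(candidates, touch_log, now, consented_channels,
                     max_touches=3, window=timedelta(days=7),
                     cooling_off=timedelta(days=2)):
    """touch_log: list of (channel, sent_at) for one HCP or patient."""
    recent = [ts for _, ts in touch_log if now - ts <= window]
    if len(recent) >= max_touches:
        return None  # frequency cap reached: send nothing

    last_by_channel = {}
    for ch, ts in touch_log:
        last_by_channel[ch] = max(ts, last_by_channel.get(ch, ts))

    eligible = [
        c for c in candidates
        if c.channel in consented_channels
        and c.predicted_lift > 0
        and (c.channel not in last_by_channel
             or now - last_by_channel[c.channel] >= cooling_off)
    ]
    return max(eligible, key=lambda c: c.predicted_lift, default=None)
```

Returning `None` is a deliberate outcome: when the cap is hit or nothing eligible has positive predicted lift, the best action is no action.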

To fill those pipes responsibly, content ops must modularize claims and automate compliant variants with traceable evidence.

Content Operations

A claims library, retrieval-augmented generation, and MLR-ready redlines shrink variant cycle time while preserving clinical and legal integrity.

- Claims Library → Modular Content: Snippets mapped to indication, audience, and objection type.

- GenAI With Retrieval: RAG confined to approved facts; automated variant generation with citations to source claims.

- MLR Tooling: Evidence links, redlines, and full change logs for traceability.

Automation is only as safe as its constraints. Next we’ll codify redaction, hallucination checks, and auditability.

Guardrails

Pre-prompt PHI redaction, fact-matching to approved knowledge, and full prompt/output logs ensure quality, safety, and accountability.

- Pre-Prompt Redaction: Strip PHI/PII; block disallowed topics.

- Hallucination Checks: Fact-matching against approved knowledge bases.

- Audit Trails: Prompts, outputs, reviewers, and activation endpoints retained per 21 CFR Part 11.
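
A rough sketch of the first two guardrails follows. The regex patterns cover only a few obvious identifier formats and are nowhere near complete PHI detection. The exact-match check against approved claims is a deliberately strict stand-in for whatever fact-matching method a team adopts. All names, and the sample claim about a hypothetical "Product X", are illustrative.

```python
import re

# Illustrative patterns only; real PHI/PII detection needs far broader coverage.
PHI_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def redact(text):
    """Replace matched identifiers with a bracketed label before prompting."""
    for label, pattern in PHI_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


def unsupported_sentences(draft, approved_claims):
    """Return draft sentences that do not exactly match an approved claim."""
    def norm(s):
        return re.sub(r"\s+", " ", s).strip().lower().rstrip(".")

    approved = {norm(c) for c in approved_claims}
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", draft) if s.strip()]
    return [s for s in sentences if norm(s) not in approved]
```

Any sentence returned by `unsupported_sentences` would be routed to a human reviewer rather than published, which keeps "zero net-new medical claims" enforceable instead of aspirational.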

For teams operationalizing GenAI, a short checklist helps keep practice aligned with policy.

Safe GenAI In Pharma Content — Quick Checklist

Use only approved claims, strip PHI, auto-link citations, apply frequency caps, and retain full review logs—this is the “every time” standard.

- Uses only approved, versioned claims as context

- PHI/PII removed or tokenized prior to processing

- Output auto-linked to citations; zero “net-new medical claims”

- Frequency/channel caps applied automatically

- Full review log for MLR with e-signatures and timestamps

With plumbing and safeguards in place, we can tailor practical channel playbooks for both HCPs and patients.

Channel Playbooks For HCPs And Patients

Different audiences, different levers: adaptive CLM/email for HCP objections and consent-based CRM/SMS for patient initiation and adherence.

HCP Engagement

- Email/CLM: Adaptive detail aids, objection-based follow-ups triggered by rep notes.

- EHR/POC: Context-aware messages filtered by audience and label.

- Programmatic HCP/Pro Social: Cadence optimized to predicted fatigue.

- Field Force: NBA call lists; content packs aligned to likely objections.

Patient Engagement

- Consent-Based CRM/SMS: Initiation/adherence nudges anchored to refill windows.

- Access & Affordability: Copay messaging when claims reject or benefits change.

- Support Hubs: Nurse scripts enriched by risk models without exposing PHI to vendors.

- Dynamic Site Experience: Geographies and access profiles drive compliant personalization.

Channel × Journey Stage × KPI (Illustrative)

To keep teams aligned, we’ll tie channels to journey stages, triggers, and measurable outcomes.

| Audience | Journey Stage | Primary Channel(s) | Example Trigger | KPI |
| --- | --- | --- | --- | --- |
| HCP | Awareness | EHR/POC, Pro Social | Relevant patient flag in EHR | EHR message acceptance rate |
| HCP | Consideration | CLM, Email, Rep Call | Objection detected in rep notes | Detail depth, follow-up open rate |
| Patient | Initiation | SMS/Email, Hub Outreach | First fill predicted at risk | NBRx conversion, time-to-start |
| Patient | Adherence | SMS/Email, Hub | Missed refill window | Persistency, abandonment rate |

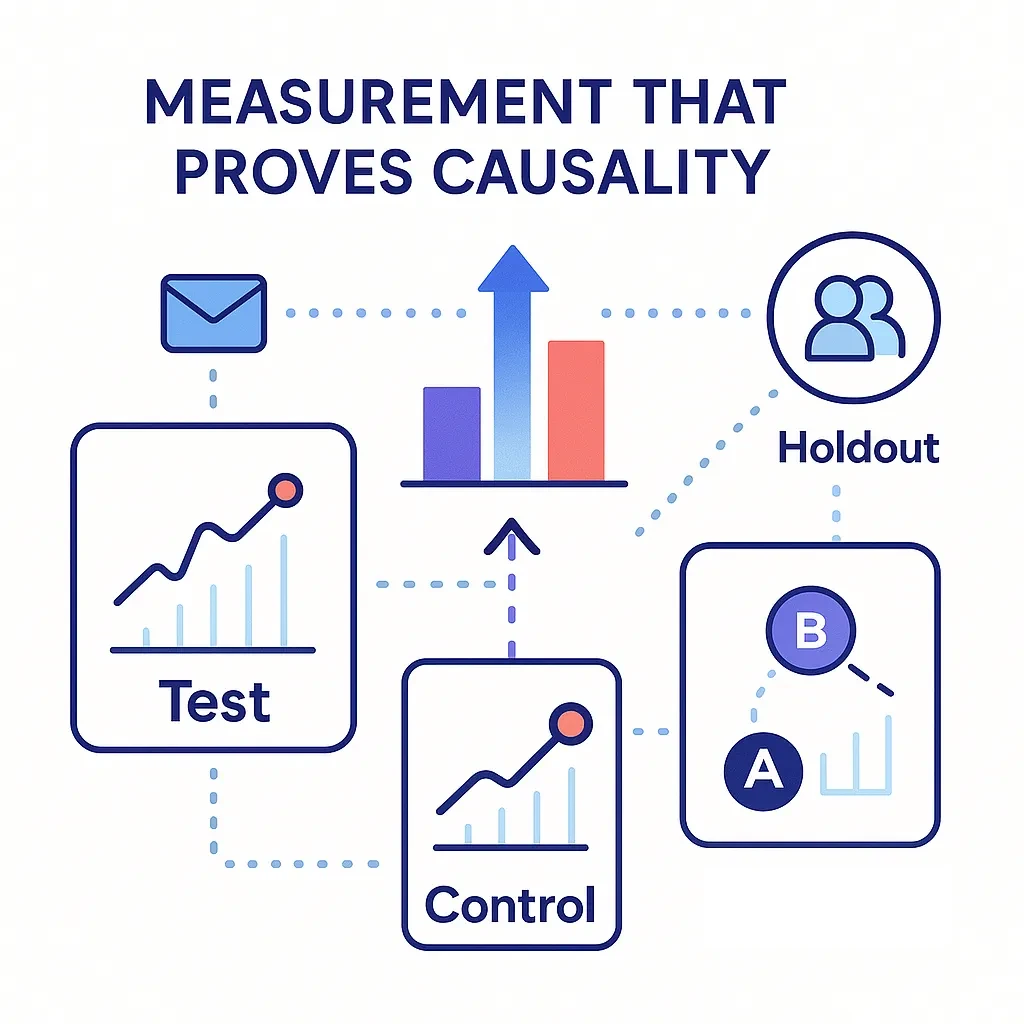

Measurement That Proves Causality

Primary outcomes (NBRx/TRx, time-to-treatment, persistency) and operational signals roll into experiments — staggered rollouts and NPI clusters — that isolate lift.

- Primary Outcomes: NBRx/TRx lift, time-to-treatment, persistency, abandonment reduction.

- Operational Signals: Qualified HCP reach, content depth, EHR acceptance, call quality.

- Experiment Design: Staggered rollouts, NPI cluster randomization, synthetic controls for territories.

- Model Monitoring: Drift detection, decay alerts, fairness by specialty/geography/access status.
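
For the cluster-randomized designs listed above, one simple approach is to hash a cluster identifier (a practice, territory, or geo) together with the experiment name, so every NPI in a cluster lands in the same arm and the assignment is reproducible. The function names and the 20% holdout share are assumptions for illustration.

```python
import hashlib


def assign_arm(cluster_id, experiment, holdout_share=0.2):
    """Deterministically assign a whole cluster to 'holdout' or 'treatment'."""
    digest = hashlib.sha256(f"{experiment}:{cluster_id}".encode("utf-8")).hexdigest()
    bucket = int(digest[:8], 16) / 0x100000000  # value in [0, 1)
    return "holdout" if bucket < holdout_share else "treatment"


def assign_npis(npi_to_cluster, experiment, holdout_share=0.2):
    """Map each NPI to the arm of its cluster."""
    return {npi: assign_arm(cluster, experiment, holdout_share)
            for npi, cluster in npi_to_cluster.items()}
```

Including the experiment name in the hash means a cluster held out of one test is not automatically held out of the next, which avoids starving the same practices of every program.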

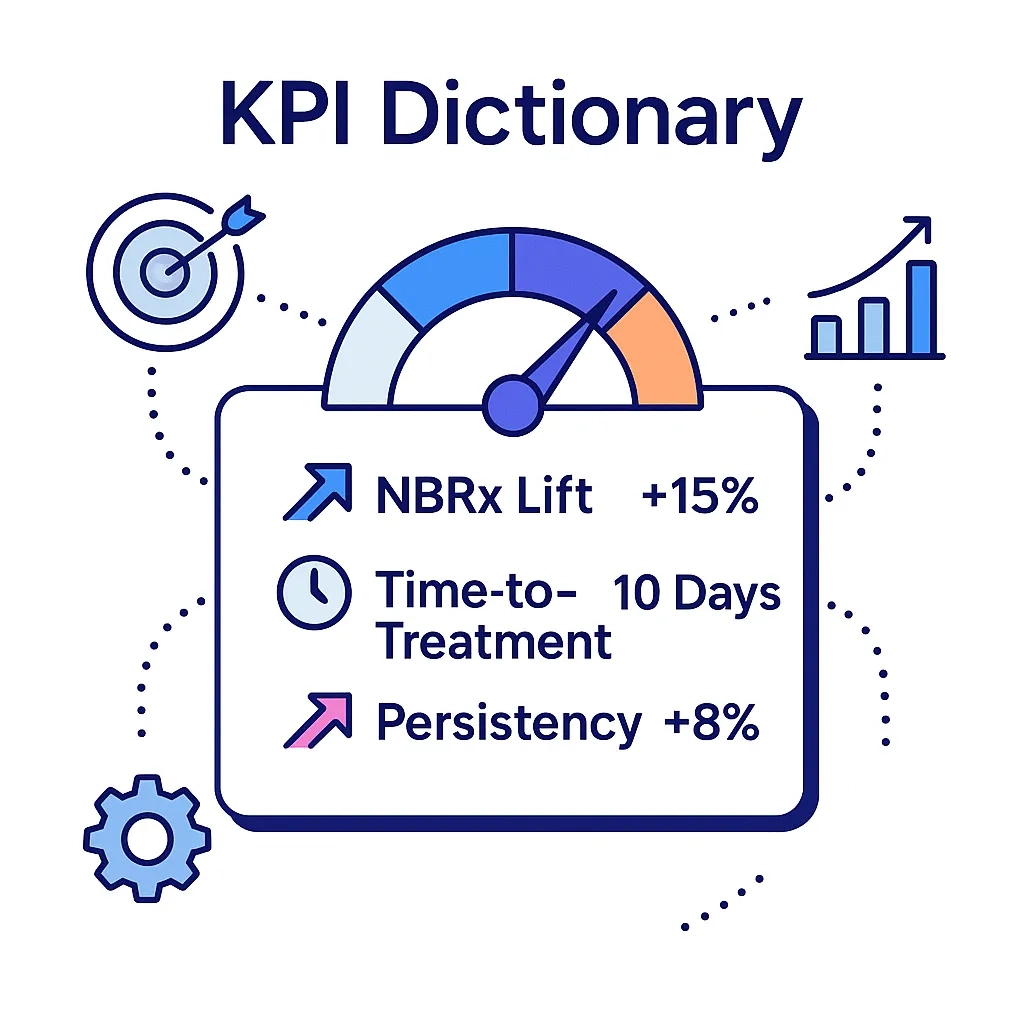

KPI Dictionary (Illustrative)

To standardize reporting, we’ll define each KPI, its source, target, and owner.

| KPI | Definition | Formula/Source | Target Example | Owner | Cadence |
| --- | --- | --- | --- | --- | --- |
| NBRx Lift | New-to-brand prescriptions vs control | Test vs control delta | +6–10% | Brand/DS | Monthly |
| Time-To-Treatment | Days from Dx to first fill | Claims with inferred Dx | −15% | Insights | Monthly |
| Persistency | % patients on therapy at 90/180 days | Claims longitudinal | +5–8 pts | Patient Ops | Quarterly |
| EHR Acceptance Rate | % accepted/qualified EHR prompts | EHR vendor logs | +3–5 pts | HCP Media | Monthly |
| Rep Call Quality | Objection addressed + follow-up completed | CLM + call notes | +10% | Sales Ops | Monthly |
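
Two of these KPIs reduce to short calculations. The sketch below treats NBRx lift as a relative difference between test and control conversion rates, which is an assumption since the table only says "test vs control delta". It treats persistency as the share of patients with at least the horizon number of days on therapy. Function names and inputs are illustrative.

```python
def relative_lift(test_conversions, test_n, control_conversions, control_n):
    """Relative difference between test and control conversion rates."""
    test_rate = test_conversions / test_n
    control_rate = control_conversions / control_n
    if control_rate == 0:
        raise ValueError("control rate is zero; relative lift is undefined")
    return (test_rate - control_rate) / control_rate


def persistency(days_on_therapy, horizon_days):
    """Share of patients whose days on therapy reach the horizon."""
    if not days_on_therapy:
        raise ValueError("no patients supplied")
    still_on = sum(1 for d in days_on_therapy if d >= horizon_days)
    return still_on / len(days_on_therapy)
```

For example, 540 new-to-brand prescriptions among 10,000 test HCPs against 500 among 10,000 controls is a relative lift of about 8%. Targets quoted in points, such as persistency, compare two such shares by subtraction rather than by ratio.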

Governance, Risk, And Compliance (By Design)

Proving value is half the job; the other half is staying within regulatory and ethical lines by default.

- Regulatory Anchors: HIPAA, FDA promotional regulations, 21 CFR Part 11, GDPR/CPRA.

- Human-In-The-Loop: MLR review on any AI-assisted copy prior to activation.

- Bias/Fairness: Evaluate outcomes by specialty, geography, and access; document mitigations.

- Vendor Due Diligence: DPAs, sub-processor lists, SOC 2/HITRUST, data residency disclosures.

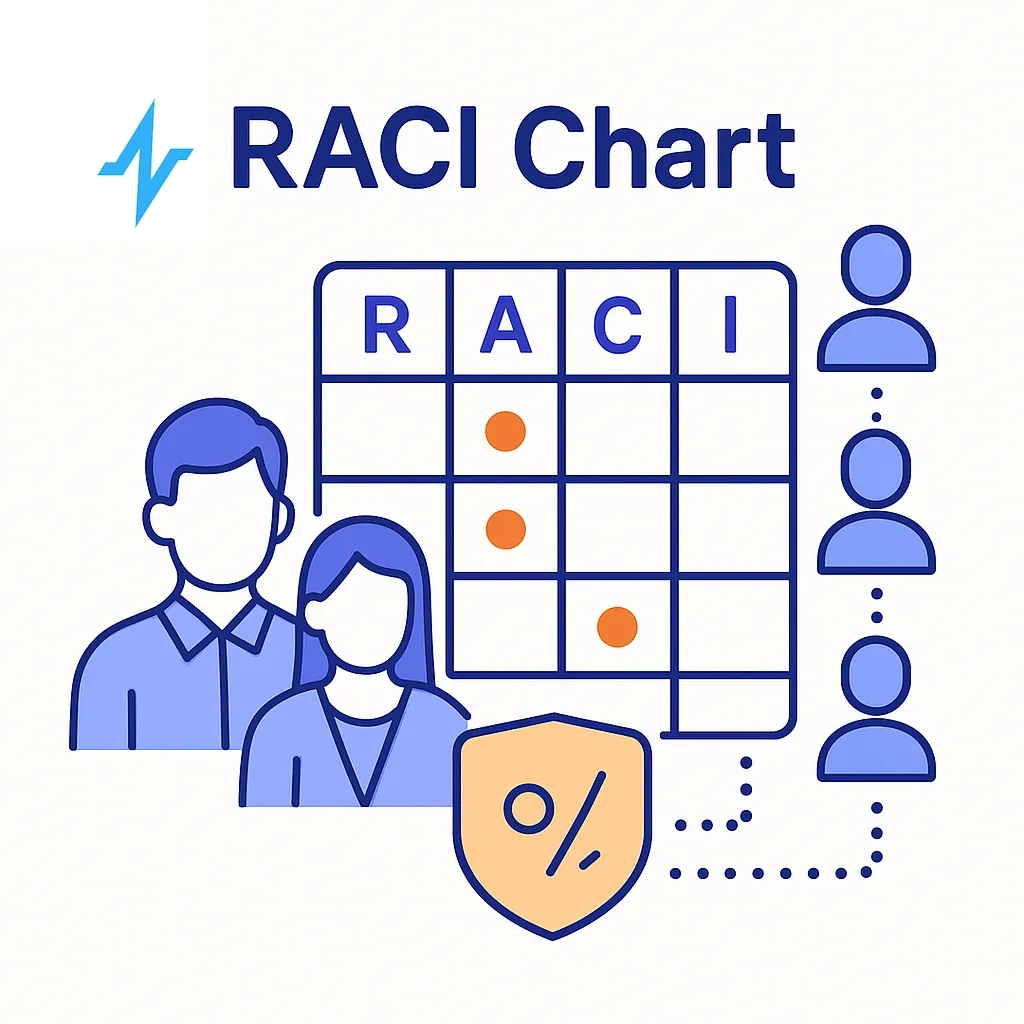

RACI For Data-Driven Engagement

| Activity | Brand | Medical/Legal/Reg | Data Science | IT/Sec | Field | Vendor |
| --- | --- | --- | --- | --- | --- | --- |
| Claims Library Stewardship | R | A | C | C | C | I |
| Audience & Policy Guardrails | A | A | C | C | I | I |
| Model Dev & Monitoring | C | I | A | C | I | C |
| Content Variant Generation | A | A | C | I | C | C |
| Channel Activation | A | I | C | C | C | R |
| Audit & Evidence Retention | C | A | C | A | I | I |

A = Accountable, R = Responsible, C = Consulted, I = Informed

Operating Model For AI-Driven Engagement

A centralized CoE, two-week experimentation sprints, enablement assets, and budget carved out for always-on tests keep improvements compounding.

- Center Of Excellence: Data science, marketing ops, engineering, and MLR enablement as one team.

- Experimentation Sprints: Two-week cycles with pre-committed holdouts; results shared in business terms.

- Enablement: Prompt libraries, claims tagging, and an “approved facts” knowledge base.

- Budgeting: Shift a portion of media/production into always-on testing funds.

To get momentum quickly, we’ll lay out a three-phase plan from inventory to MVP to scale.

Implementation Roadmap: 90 Days To First Lift

| Phase | Weeks | Focus Areas | Milestones |
| --- | --- | --- | --- |
| Foundation | 0–4 | Data inventory, consent audit, feature store MVP, baseline KPIs | Data map signed off; guardrails live; dashboards baselined |
| MVP | 5–8 | One HCP and one patient use case; uplift test; modular content with MLR | First experiment in market; variant production < 5 days |
| Scale | 9–13 | Expand to 3–5 segments; EHR/POC trigger; NBA for field; MMM+MTA triangulation | Documented lift; playbook for rollout; refresh plan |

Case-Style Scenarios (Anonymized)

To show the roadmap in the wild, we’ll review anonymized wins that mirror common brand challenges.

- HCP Decile Shift: Predicted decile growth in community cardiology → EHR prompt + rep follow-up → +8% NBRx in randomized test geos.

- Initiation Risk: Patients flagged as high abandonment risk at first fill → affordability SMS + hub outreach → −12% drop-off.

- Access Intervention: Payer denial detected → dynamic copay messaging and prior-auth checklist → +9% re-submission success.

Build Vs. Buy: A Practical Decision Framework

Balance security/PHI handling, transparency, integration effort, MLR fit, and total cost. Many teams keep decisioning in-house and use vendor channels.

| Criterion | Build In-House | Buy Platform | Hybrid (Common) |
| --- | --- | --- | --- |
| Security/PHI Handling | Max control; slower time-to-value | Vendor attestations; faster start | Keep decisioning in-house; use vendor channels |
| Transparency | Full model visibility | Varies by vendor | In-house uplift logic + vendor delivery |
| Integration Effort | High (Veeva, EHR/POC, CRM) | Vendor connectors | Prioritize 2–3 critical integrations |
| MLR Workflow Fit | Customizable | Depends on plugin ecosystem | MLR in-house; vendor for generation UX |
| Total Cost Of Ownership | Higher upfront, lower long-run | Lower upfront, variable over time | Balanced, with optional switchability |

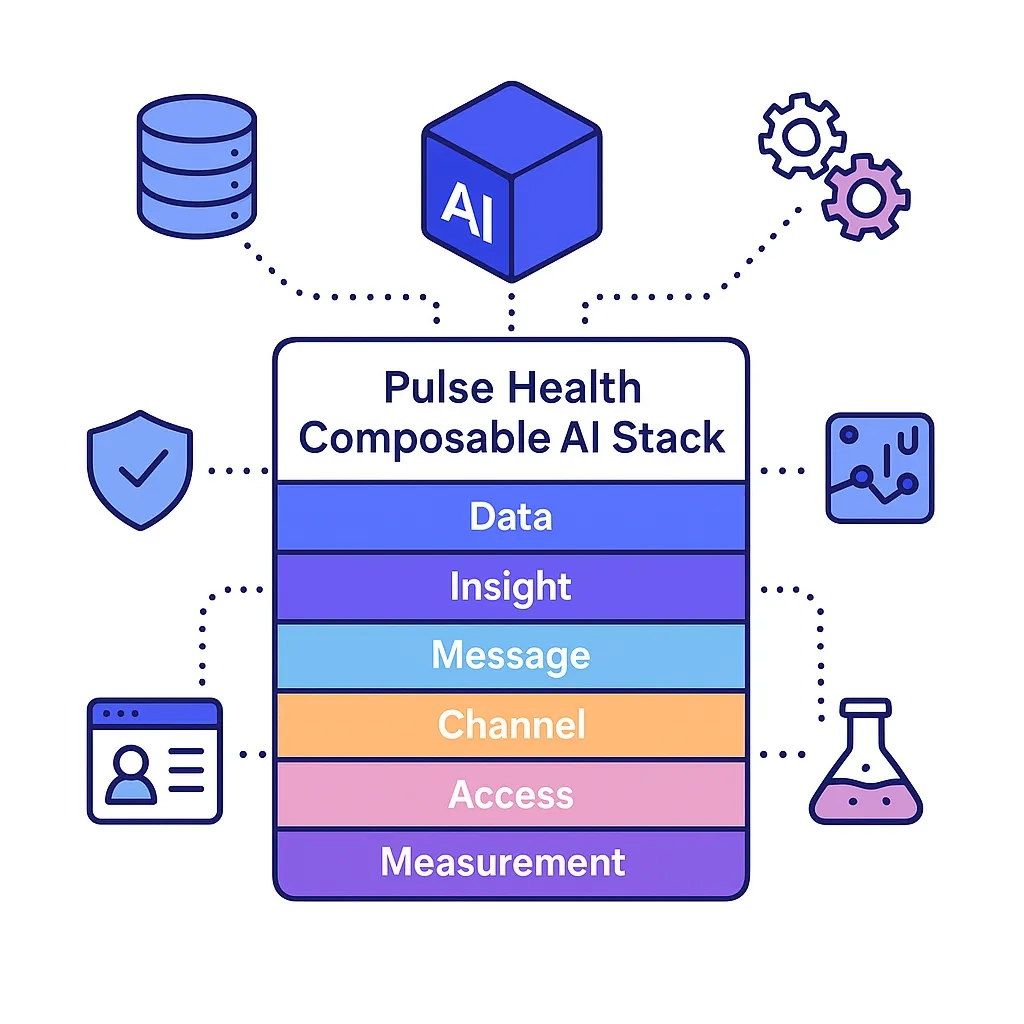

Pulse Health Point Of View

Pulse Health maps The Six Pillars To Prescription Growth — Data, Insight, Message, Channel, Access, Measurement — to a composable AI stack:

- Pre-Built Integrations: Veeva/CLM, EHR/POC partners, CRM/email, and call center — activated only with consent.

- Composable Governance: Claims library, audit logs, and MLR connectors ensure every variant is explainable.

- Experimentation Cadence: Holdouts by default, uplift as the north-star metric, and monthly fairness reviews.

- Speed With Safety: GenAI is restricted to approved facts via retrieval; all outputs are traceable and MLR-ready.

Next, we’ll summarize why the platform’s architecture and controls specifically fit regulated commercial use.

Why Pulse Health Is Built For Pharma-Grade AI

From Veeva/EHR/CRM connectors and clean-room options to uplift-first decisioning and consent controls, the design optimizes both speed and safety.

- Composable stack aligned to The Six Pillars To Prescription Growth — Data, Insight, Message, Channel, Access, Measurement — so every experiment ladders to TRx.

- Pre-built integrations (Veeva/CLM, EHR/POC partners, CRM/email, call center) speed time-to-value without compromising consent controls.

- Clean-room and tokenization options for PHI/PII ensure activation stays compliant across audience building, modeling, and measurement.

- Uplift-first decisioning targets incremental lift rather than raw response, reducing waste and improving causal proof.

Platform principles translate into day-to-day workflows—especially in content and review.

How Pulse Health Operationalizes AI With Compliance

Claims-bound generation via retrieval, MLR-ready change logs and e-signatures, centralized policy guardrails, and end-to-end audit trails turn compliance into muscle memory.

- Claims-Bound Generation: GenAI is retrieval-augmented from an approved claims library; all copy variants retain citations to evidence.

- MLR-Ready Workflows: Automatic change logs, redlines, reviewer stamps, and e-signature support mapped to 21 CFR Part 11.

- Policy Guardrails: Frequency caps, audience exclusions, and channel rotation are enforced centrally—before activation.

- End-To-End Auditability: Every prompt, output, approver, and activation endpoint is traceable for audits and vendor assessments.

So what should leaders expect after two quarters of disciplined execution?

What Good Looks Like In Six Months

You should see measurable TRx lift in targeted segments, faster time-to-start, higher persistency, sub-two-week content cycles, monitored models, and documented consent lineage.

- Commercial Lift: +6–10% NBRx/TRx in targeted segments; −15% time-to-initiation; +5–8-pt persistency.

- Operational Velocity: Sub-two-week content variant turnaround; quarterly model refresh; automated drift alerts.

- Compliance Confidence: Documented consent lineage, complete review trails, and bias reporting by segment.

If this operating model aligns with your goals, the fastest way to start is a short diagnostic that picks the two or three use cases with the highest near-term lift.

Get Started with Pulse Health Today

Book A 30-Minute AI Engagement Diagnostic. We’ll map your data, prioritize 2–3 high-lift use cases, and deliver a 90-day roadmap aligned to MLR and brand goals — so your teams move faster with confidence.